Visuomotor Policy Learning with Gaussian Splatting

More details coming soon...

I am a Master's student at the Carnegie Mellon University (CMU) Robotics Institute and a member of the Robots Perceiving and Doing (RPAD) Lab, advised by Prof. David Held.

My research lies at the intersection of Computer Vision and Robot Learning. I'm particularly interested in developing methods that enable robots to acquire generalizable manipulation skills from visual demonstrations through geometric reasoning.

Previously, I earned my Bachelor of Science in Electrical Engineering, with a minor in Computer Science, from the University of Illinois at Urbana-Champaign with Highest Honors. During my undergraduate studies, I worked as a research assistant in the Human-Centered Autonomy Lab, advised by Professor Katie Driggs-Campbell. I also spent a summer as a visiting scholar in the Ren Lab at The Chinese University of Hong Kong, advised by Professor Hongliang Ren.

You can contact me at lyuxingh at andrew dot cmu dot edu.


A hierarchical, object-centric point diffusion framework that combines a dense Gaussian mixture model (GMM) for global initialization with disentangled diffusion over geometry and frame, achieving state-of-the-art precision, multimodal coverage, and generalization on both rigid and non-rigid placement tasks.

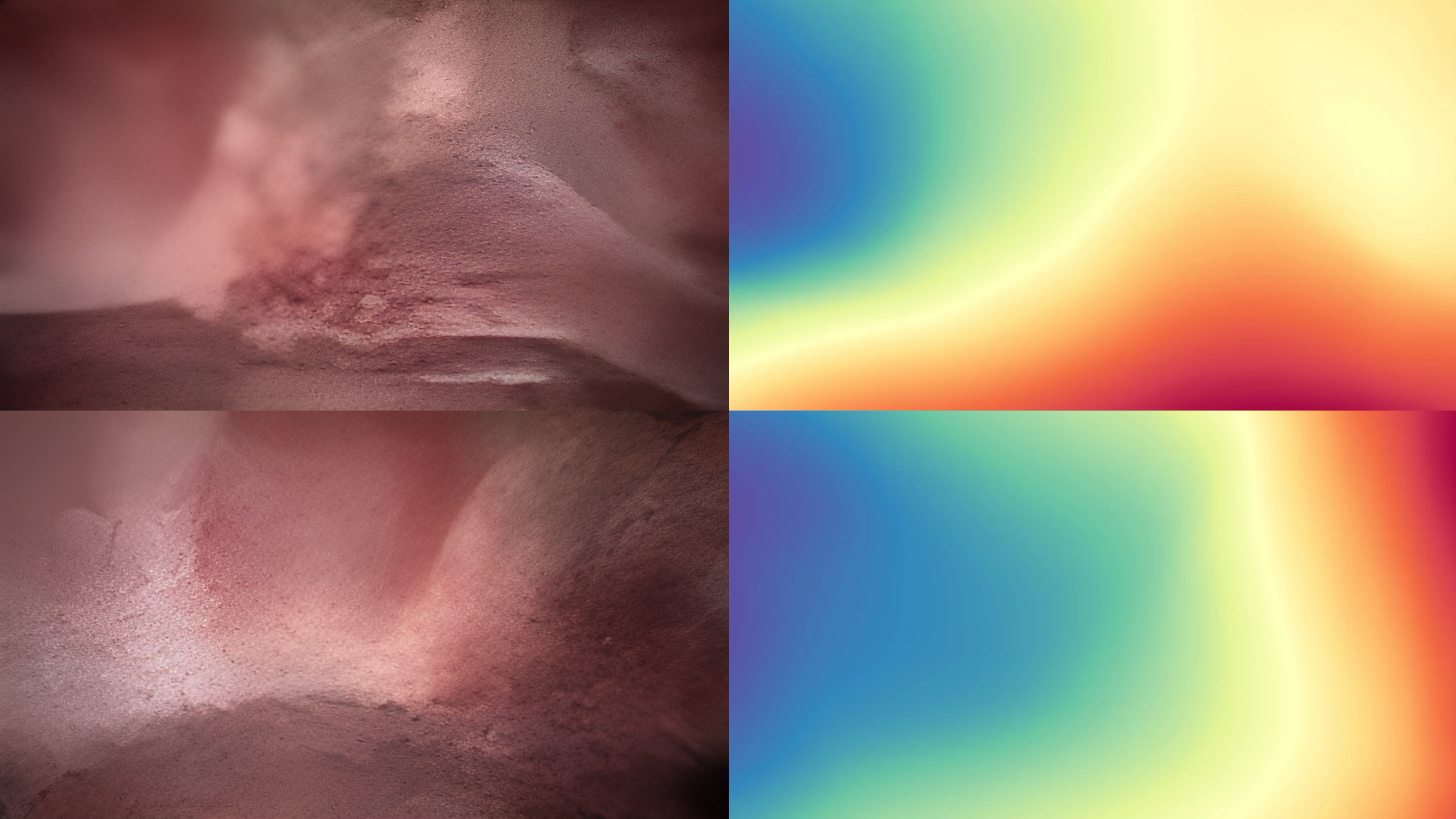

Handbook of Robotic Surgery, 2024

An end-to-end pipeline for surgical data automation and scene reconstruction that assembles an in vivo gastrointestinal (GI) dataset and trains a pose-free Neural Radiance Field (NeRF) to produce dense 3D representations despite specular surfaces and limited data.

The National Center for Supercomputing Applications (NCSA)

An interactive holographic display system that uses gesture control to visualize volumetric models, such as the electron density of chemical compounds.

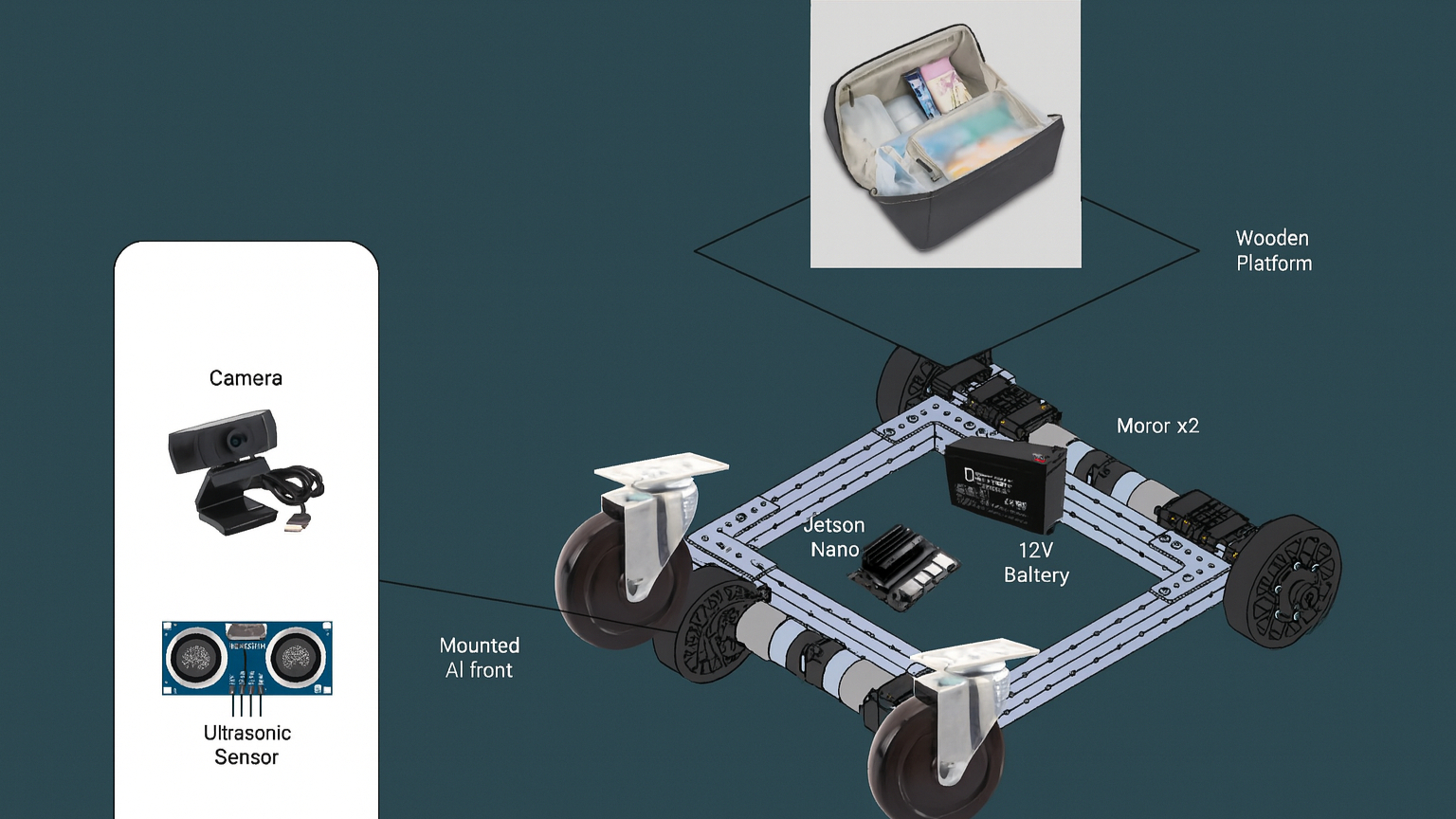

AutoLug, an auto-following luggage platform that combines owner registration with onboard vision-based identification to follow its owner safely and without collisions. Compared with conventional smart suitcases, it is more affordable, works across many types of luggage, and can be reused as a general-purpose platform.